Cybersecurity Snapshot: A ChatGPT Special Edition About What Matters Most to Cyber Pros

Since ChatGPT’s release in November 2022, the world has seemingly been engaged in an “all day, every day” discussion about the generative AI chatbot’s impressive skills, evident limitations and potential to be used for good and evil. In this special edition, we highlight six things about ChatGPT that matter right now to cybersecurity practitioners.

1 - Don’t use ChatGPT for any critical cybersecurity work yet

Despite exciting tests of ChatGPT for tasks such as finding coding errors and software vulnerabilities, the chatbot’s performance can be very hit-or-miss, and anything it produces as a cybersecurity assistant should be – at minimum – manually and carefully reviewed.

For instance, Chris Anley, NCC Group’s chief scientist, used it to do security code reviews and concluded that “it doesn’t really work,” as he explained in the blog “Security Code Review With ChatGPT.”

Generative AI tools at best produce useful information that’s accurate between 50% and 70% of the time, according to Jeff Hancock, a faculty affiliate at the Stanford Institute for Human-Centered AI. They also outright make stuff up – or “hallucinate,” he said in the blog post “How will ChatGPT change the way we think and work?”

Interestingly, a recent Stanford study found that users with access to an AI code assistant “wrote significantly less secure code than those without access,” while ironically feeling more confident that their code was secure. Meanwhile, in December, Stack Overflow, the Q&A website for programmers, banned ChatGPT answers because it found too many to be incorrect.

In fact, OpenAI, ChatGPT’s creator, has made it clear that it’s still early days for ChatGPT and that much work remains ahead. "It's a mistake to be relying on it for anything important right now," OpenAI CEO Sam Altman tweeted in December, a thought he’s continually reiterated.

More information:

- “What You Need to Know About OpenAI's New ChatGPT Bot - and How it Affects Your Security” (SANS Institute)

- “ChatGPT Subs In as Security Analyst, Hallucinates Only Occasionally” (Dark Reading)

- “Where AI meets cybersecurity: Opportunities, challenges and risks so far” (Silicon Angle)

- “ChatGPT - Learning Enough to be Dangerous” (Cyber Security Agency of Singapore)

VIDEOS

ChatGPT: Cybersecurity's Savior or Devil? (Security Weekly)

Tenable CEO Amit Yoran discusses the impact of AI on cyber defenses (CNBC)

2 - By all means check out ChatGPT’s potential

While ChatGPT isn’t quite ready for prime time as a trusted tool for cybersecurity pros, its potential is compelling. Here are some areas in which ChatGPT and generative AI technology in general have shown early – albeit often flawed – promise.

- Incident response

- Training / education

- Vulnerability detection

- Code testing

- Malware analysis

- Report writing

- Security operations

"I'm really excited as to what I believe it to be in terms of ChatGPT as being kind of a new interface," Resilience Insurance CISO Justin Shattuck recently told Axios.

However, a caveat: If you’re feeding it work data, first check with your employer about what’s OK to share and what isn’t. Businesses have started to issue guidelines restricting and policing how employees use generative AI tools. Why? There’s concern and uncertainty about what these tools might do with data entered into them: Where does that data go? Where is it stored and for how long? How could it be used? How will it be protected?

More information:

- “How ChatGPT is changing the way cybersecurity practitioners look at the potential of AI” (SC Magazine)

- “ChatGPT for Blue Teams and Analysis” (System Weakness)

- “I Used GPT-3 to Find 213 Security Vulnerabilities in a Single Codebase” (Better Programming)

- “Accenture Exec: ChatGPT May Have Big Upside For Cybersecurity” (CRN)

- “An Analysis of the Automatic Bug Fixing Performance of ChatGPT” (Johannes Gutenberg University)

VIDEOS

How I Use ChatGPT as a Cybersecurity Professional (Cristi Vlad)

ChatGPT For Cybersecurity (HackerSploit)

3 - Know attackers will use ChatGPT against you – or maybe they already have

Although ChatGPT is a work in progress, it’s good enough for the bad guys, who are reportedly already leveraging it to improve the content of their phishing emails and to generate malicious code, among other nefarious activities.

“The emergence and abuse of generative AI models, such as ChatGPT, will increase the risk to another level in 2023,” said Matthew Ball, chief analyst at market research firm Canalys.

Potential or actual cyberthreats related to abuse of ChatGPT include:

- Helping to craft more effective phishing emails

- Leveraging it to generate hateful, defamatory and false content

- Lowering the barrier to entry for less-sophisticated attackers

- Using it to create malware

- Directing people to malicious websites from a hijacked AI chatbot

For more information about malicious uses of ChatGPT:

- “ChatGPT-powered cyberattacks expected” (GCN)

- “How hackers can abuse ChatGPT to create malware” (TechTarget)

- “OpenAI's new ChatGPT bot: 10 dangerous things it's capable of” (BleepingComputer)

- “Does ChatGPT Pose A Cybersecurity Threat?” (Forbes)

- “4 Cybersecurity Risks Related to ChatGPT and AI-powered Chatbots” (CompTIA)

- “ChatGPT gets jailbroken … and heartbroken?” (Tenable)

VIDEOS

Widely available A.I. is ‘dangerous territory,’ says Tenable’s Amit Yoran (CNBC)

I challenged ChatGPT to code and hack: Are we doomed? (David Bombal)

4 - Expect government regulatory engines to rev up

We’ll likely see a steady stream of new regulations as governments try to curtail abuses and misuses of ChatGPT and similar AI tools, as well as to establish legal guardrails for their use.

That means security and compliance teams should keep tabs on how the regulatory landscape takes shape around ChatGPT and generative AI in general, and on how it will affect the way these products are designed, configured and used.

“We also need enough time for our institutions to figure out what to do. Regulation will be critical and will take time to figure out,” OpenAI’s Altman tweeted in mid-February.

More information:

- “Regulating ChatGPT and other Large Generative AI Models” (University of Berlin)

- “Restraining ChatGPT” (University of Hamburg)

- “Powerful AI Is Already Here: To Use It Responsibly, We Need to Mitigate Bias” (U.S. National Institute of Standards and Technology)

- “What ChatGPT Reveals About the Urgent Need for Responsible AI” (BCG Henderson Institute)

- “As ChatGPT Fire Rages, NIST Issues AI Security Guidance” (Tenable)

5 - Is ChatGPT coming for your job?

Don’t fret about ChatGPT taking your job. Instead, generative AI tools will help you do your job better, faster, more precisely and differently.

After all, regardless of how sophisticated these tools get, they may always need some degree of human oversight, according to Seth Robinson, industry research vice president at the Computing Technology Industry Association (CompTIA).

“So, when we talk about ‘AI skills,’ we’re not just talking about the ability to code an algorithm, build a statistical model or mine huge datasets. We’re talking about working alongside AI wherever it might be embedded in technology,” he wrote in the blog “How to Think About ChatGPT and the New Wave of AI.”

Meanwhile, investment bank UBS said in a recent note: “We think AI tools broadly will end up as part of the solution in an economy that has more job openings than available workers.”

For cyber pros specifically, the desired skillset will involve knowing how to counter AI-assisted threats and attacks, which requires understanding “the intersection of AI and cybersecurity,” reads a blog post from tech career website Dice.com.

More information:

- “Why security teams are losing the AI war” (VentureBeat)

- “ChatGPT and more: What AI chatbots mean for the future of cybersecurity” (ZDNet)

VIDEOS

Is OpenAI Chat GPT3 Coming For My Cybersecurity Job? (Day Cyberwox)

Cybersecurity jobs replaced by AI? (David Bombal)

6 - Definitely keep tabs on generative AI – it’s not going away

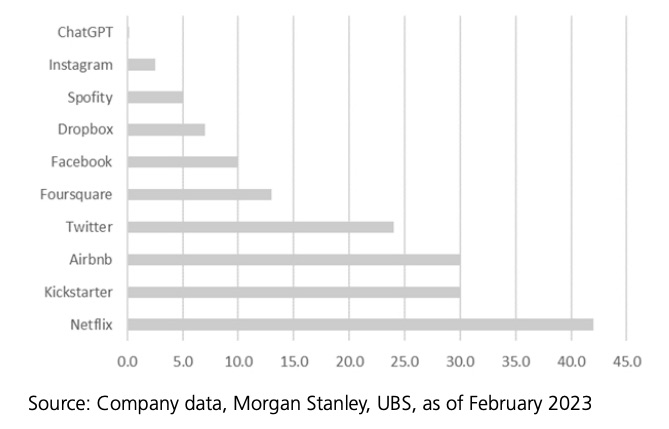

ChatGPT reached 100 million monthly active users barely two months after its launch, becoming the fastest-growing consumer app ever, according to UBS researchers.

Months It Took to Reach 1 Million Users for Each Application

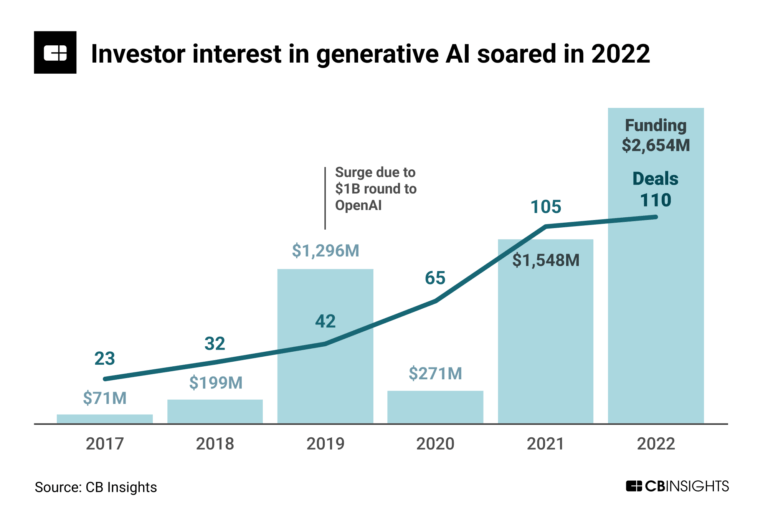

And it’s not just OpenAI. Venture capital funding for generative AI startups in general spiked in 2022, according to CB Insights, which has identified 250 players in this market.

Companies are already incorporating OpenAI technology into their products and operations. For example, Microsoft, which made a multibillion-dollar investment in OpenAI, is building the startup’s generative AI technology into its products, including the Bing search engine. Meanwhile, Bain & Co. uses the OpenAI tech internally while also working with clients interested in adopting OpenAI tools like ChatGPT, including The Coca-Cola Company.

In short, ChatGPT and generative AI tools in general are here to stay, and they’ll have a broad impact across our personal and professional lives. It’s a matter of time until ChatGPT-like technology gets incorporated into defender cybersecurity tools that can be trusted to consistently perform with an acceptable level of accuracy and precision.

More information:

- “You'll Be Seeing ChatGPT's Influence Everywhere This Year” (Cnet)

- “ChatGPT in Cybersecurity: Benefits and Risks” (Nuspire)

- “ChatGPT Is a Tipping Point for AI” (Harvard Business Review)

- “How ChatGPT signals new productivity potential in the digital workplace” (Omdia)

- “How tools like ChatGPT could change your business” (McKinsey)

VIDEOS

Why OpenAI’s ChatGPT Is Such A Big Deal (CNBC)

Satya Nadella: Microsoft's Products Will Soon Access Open AI Tools Like ChatGPT (Wall Street Journal)
